AI: Impending Dark Age for Technical Writing... Or Renaissance of Useful Output?

No one knows where AI will ultimately take us. My strategy for enduring–and perhaps even thriving–in the next 5 years and beyond + how society and education will change after "the churn"

TL;DR: Write about YOUR primary experience, in YOUR OWN voice. Don’t depend on reporting on other people’s work where AI can do things cheaper and better.

My job, for the last 8 years or so, has involved a number of endeavors, from designing a new drone camera mounting system, to running multiple successful Kickstarters, to writing about my projects and what I’ve learned for publications like Embedded Computing Design.

However, much of how I’ve actually made a living involves summarizing information from other people’s projects in an easily digestible format, e.g. most of my articles on Hackster.io. Given my massive curiosity and rather broad technical expertise–reinforced by this writing process–I’m ideally suited to this sort of read-understand-summarize output. The work is flexible, can pay well, and isn’t too hard.

Do you know “who” else is good at the read-understand-summarize job? AI, or ChatGPT if you want to get specific, and it’s infinitely cheaper and faster than I am. Even if it gets things wrong from time to time, I suspect it will only get better…[1]

But perhaps there still is hope for me and my ilk.

AI will take over some–not all–technical writing work

While the read-understand-summarize-maybe-keyword-stuff work has/will become less common, the portion of my work where I build a project and write about it, try out a new product for review, or write about a concept that requires my personal experience and/or the interviewing of actual people–i.e. primary research–appears to be alive and possibly even growing.

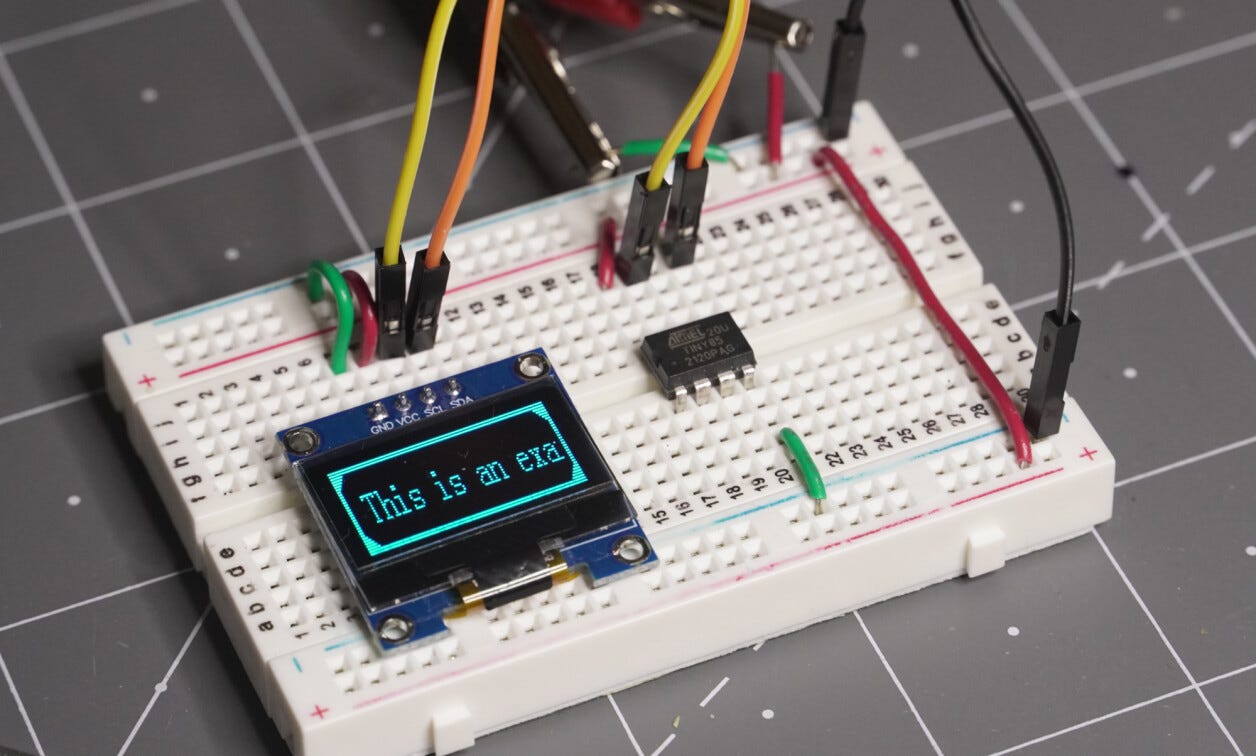

For example, one of my articles on Embedded Computing Design involved figuring out how to use a certain microcontroller (the ATtiny85) with a 0.96-inch I2C OLED screen that is very common in DIY electronics projects. The information was mostly out there, but I had to figure out what firmware worked well with this combination and poke around until it was functional. I then photographed it in action, something that would be impossible for AI to reproduce, at least as things stand now.[2]

The great news is that I (generally) love this sort of work, though it doesn’t currently fill my schedule by itself. I do still have some summary/research work available, which gives me runway to continue transitioning to more hands-on work. Notably, I’ve been doing a number of hands-on, video-based projects for longtime client Hackster.io.[3] Of course, if you have any such projects that could use my expertise, feel free to get in touch: [hi@jeremyscook.com].

AI effect on education and human intelligence

If you’re a certain age, there’s a very good chance you wanted to be an archeologist and/or paleontologist thanks to Indiana Jones and/or Jurassic Park…[4] When I originally wrote that, I took a break, and now I don’t know where I was going with that statement…

My thought process may have had something to do with the fact that a large percentage of young (Gen Z) people want to be influencers, likely flooding the market, and helping to make that profession rather more difficult than many people would think.

More generally speaking, I wonder how education will change in the next few years. Obviously, people still need to know how to write, but it will be ever more tempting to just tell Mr. GPT to do your homework for you, and that will be very hard to police. One could see future society’s mental skills–once (selectively) honed to a razor’s edge by overcoming complicated business challenges and pursuing scholarship–becoming rather dull. The world’s knowledge base, in turn, will become more polluted as AI-written papers are used as source material for more AI… over, and over, and over again, until we have total informational slop. I touch on this a bit more in my AI: The End of Writing as a Profession or a New Beginning? post.

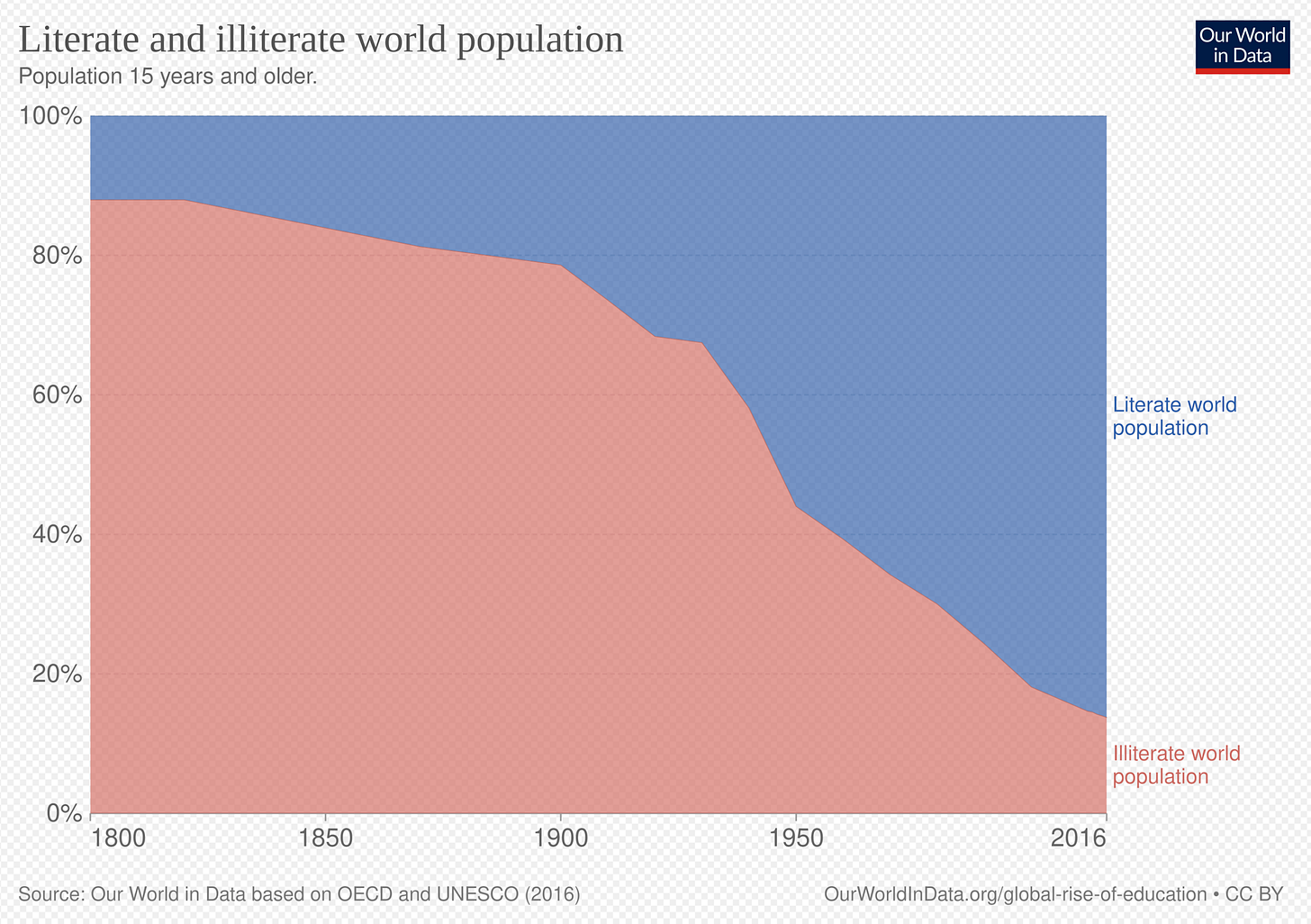

Take this a few steps further, and we could have a broad informational middle class so entirely coddled by AI that those who are raised in technologically backward civilizations will actually be more mentally capable than those “higher up” on the societal ladder. One might even foresee a situation where those who receive a truly first-class, highly controlled education (i.e. no AI cheating) have more of an advantage than at any time since the late 1800s, when widespread literacy was just starting to take root.

That seems a little bleak. But maybe it gets better:

(Optimistic) silver lining: more total primary, actual knowledge, and useful labor

I met a physicist about a year ago who had worked for CERN in Switzerland, but now works for Google and makes more money optimizing icons than she did at her (to me, very impressive) previous job.[5] While there are other factors at play in her case (e.g. proximity to family), I think we can all agree that understanding the wonders of how our world works at a subatomic level is more valuable to society than a tiny bit of extra advertising revenue for Alphabet/Google.

Suppose that her current job gets taken over by AI, along with the mundane (but profitable) read-understand-regurgitate work that sustains many writers. For that matter, maybe robo-taxis take the jobs of people who could be very useful in other roles (e.g. the recent US immigrant who was a doctor in his home country, but isn’t qualified to practice here).

This will mean some short- or even medium-term pain for those affected, and some social arrangements may need rethinking. However, if these people were able to move into roles that benefit society even more, it’s possible we will be better off in the long run.

The above examples would allow very intelligent people to more closely align with their best output, but many people driving taxis–or even doing basic writing or administrative tasks–don’t have the intelligence to work at CERN[6] or to practice medicine. Some of these people would certainly be better suited to more valuable manual labor jobs than turning a steering wheel (especially jobs that take a bit of brawn and a certain type of intelligence, e.g. carpenters, plumbers, electricians). Workers in such trades seem to be in short supply these days, after we pushed so many people into higher education.

If the people “AI’d” out of a job end up contributing to society’s knowledge and prosperity in a different way, then maybe we’ll see an explosion of competence and productivity unlike anything the world has known before.

Or you might say I’m being overly optimistic. After all, if someone is AI’d out of his or her job, another one doesn’t magically pop up out of the blue. And if the worst parts of what I wrote about above come to fruition, these benefits will be largely wiped out and/or restricted to a small sliver of the population. All valid arguments, but why not consider the best-case scenario once in a while?

Where do we go from here?

Perhaps we need some sort of minimum age for the use of AI, or even a sort of “driver’s license” that grants you access to this technology. Maybe it should even require a yearly test to prove your ongoing general competence. I’m not holding my breath, but if we’re going to reap the potential rewards of AI, we need to at least give some thought to the consequences for our mental prowess.[7]

Sharing is caring👇 (depending on the context). Subscribing is also very good.

Thanks for reading! I hope you will follow along as I post weekly about engineering, technology, making, and projects. Fair warning: I am a native Florida man, and may get a little off-topic in the footnotes. Maybe I even had an alligator or two as pets growing up. Perhaps they are alive today and could be used to test earth-wormhole pet friendliness.

Any Amazon links are affiliate links.

Addendum/Footnotes:

[1] As long as it doesn’t self-reinforce its own mistakes. Consider that if AI writes a summary of an article with facts X and Y, and that summary is published somewhere while being a little bit off, the next AI summary could be even further off from the true facts X and Y… and maybe Z. After a few cycles, we have something that is entirely inaccurate. If this continues over and over, far and wide on the Internet, this glorious Information Superhighway becomes more of a Misinformation Superhighway.

Some might argue it’s already that, thanks to political bias and the like, but the idea that even rather non-controversial (but still very important) technical information will become less and less reliable really bums me out. If I’m ultimately replaced by AI, I can get another job, but if the world’s information supply is forever tainted, are we back to traveling to the library for facts that we now take for granted–literally in the palm of our hands?

And that’s not meant as a slight on libraries in the least. If anything it’s an argument for their preservation as a bulwark from the coming info-pocalypse.

One could argue that AI companies need to pay primary creators for the informational value they bring to the world, and rely on non-AI sources for “primary truth.” On the other hand, does my looking something up on ChatGPT and verifying it/putting my own spin on it count for less than something I just pulled out of my head? It’s a lot to consider, especially when it comes down to your average Substack author and the like.

[2] Notably, I don’t write this sort of article for Embedded Computing Design at the moment, so I suppose even this example is questionable. They go through business phases like any other entity, and the work ebbs and flows at times.

I do still present an online training class for them each month, so one might say that this is leaning even further into the nontraditional publishing realm. I think I’ve had this post in draft/scheduled form since before that work dropped off, at least for now. Also see footnote 3.

[3] Some of the projects seen there were paid for by Hackster; some are my own.

Video is also a big part of what I do now, whether in the form of online classes, trying out new equipment, or other technical content. I think AI-generated video will increasingly be able to supplant human actors (and it already does in some rather high-quality content), but, similar to writing, if you need someone to get their hands on something while being comfortable on camera and possessing technical expertise, that is a rare combination of skills that AI just can’t replicate for the foreseeable future.

Video is more labor-intensive than text, but if I (and we) can charge in a way that correlates with the time put in, then it could be a net positive. Whether your content is written or makes the transition to video, as explored in this separate post, I still think that today it’s especially important to let your personality come out and to develop a consistent fanbase/brand, for lack of better terms.

[4] Given how many sequels and spinoffs of both movies (~31 Jurassic Park, ~18 Indiana Jones) there have been over the last half-century or so, “a certain age” could apply to just about anyone in today’s workforce, and to those entering it in the next 20-ish years.

And since you asked, Star Trek clocks in at somewhere in the 125 range!

Finally, between when I originally wrote this draft, and when I picked it up later, I totally forgot what I was going to say beyond the two pop-culture references. Still, a good first sentence, so I felt like I needed to fill in the rest.

[5] I may be getting the precise details slightly off here, but the story is directionally accurate.

[6] Would I be intelligent enough? No idea, but I certainly don’t have the education for it, at least outside of a support role. They may laugh at mechanical engineers there in the same way that the physicists on The Big Bang Theory mock Howard Wolowitz for having “merely” a master’s degree from MIT.

[7] Much like how we don’t need to be as strong today as we were before the invention of the wheel. Perhaps it takes more dedicated effort to stay strong and in shape today than it did at one time (neglecting the ever-present danger of famine and/or malnutrition), meaning we need some time on the treadmill et al. Maybe it’s the same with our brain function and the advent of AI.

Coincidentally, my friend Pocket83 called me the day before this post was published to tell me how he’d been using Google’s AI service as a sort of sounding board for philosophical questions. His feeling was that it was great in that role, and geared more toward big-picture thinking than mundane details.

To be fair, I haven’t used Google’s service much. Given that I often want AI to confirm a specific fact or two, that big-picture orientation can be annoying to me at times.